When I was young I was fascinated with the idea that the Coriolis effect (the concept in physics which explains why hurricanes rotate in opposite directions in the southern and northern hemispheres) could similarly be applied to common phenomena like water disappearing down a bathtub drain. On my first trip to Cape Town many years ago I couldn’t wait to try this out, only to realize in my hotel bathroom that I had never actually got around to checking which direction water drains in the northern hemisphere before I left. So much for the considered rigor of science.

It turns out, of course, that the Coriolis effect, when applied on such a small scale, becomes negligible in the presence of more important factors such as the shape of your toilet bowl. And so yet another one of popular culture’s most cherished myths is busted, and civilization advances ever so slightly.

Something that definitely does not run in the opposite direction south of the equator is cloud computing, though to my surprise conferences down under do take a turn in the positive direction. I’ve just returned from a trip to Australia, where I attended the 2nd Annual Future of Cloud Computing in the Financial Services conference, held last week in both Melbourne and Sydney. What impressed me is that most of the speakers were far beyond the blah-blah-blah-cloud rhetoric we still seem to hear so much of, and focused instead on their real, day-to-day experiences with using cloud in the enterprise. It was as refreshing as a spring day in Sydney.

Greg Booker, CIO of ANZ Wealth, opened the conference with a provocative question. He simply asked who in the audience was in the finance or legal departments. Not a hand came up in the room. Now bear in mind this wasn’t Microsoft BUILD—most of the audience consisted of senior management types drawn from the banking and insurance community. But obviously cloud is still not front of mind for some very critical stakeholders that we need to engage.

Booker went on to illustrate why cross-department engagement is so vital to making the cloud a success in the enterprise. ANZ uses a commercial cloud provider to serve up most of its virtual desktops. Periodically, users complained that their displays appeared rendered in foreign languages. Upon investigation they discovered that although the provider had deployed storage in-country, some desktop processing took place on a node in Japan, which made this something of a grey area in terms of compliance with export restrictions on customer data. To complicate matters further, the provider would not be able to make any changes until the next maintenance window, an event which happened to be weeks away. IT cannot meet this kind of challenge alone. As Randy Fennel, General Manager, Engineering and Sustainability at Westpac put it succinctly, “(cloud) is a team sport.”

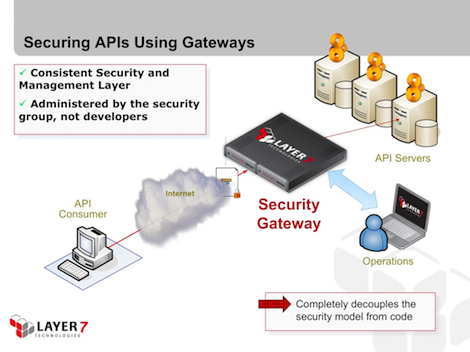

I was also struck by a number of insightful comments made by the participants concerning security. Rather than being shut down by the challenges, they adopted a very pragmatic approach and got things done. Fennel remarked that Westpac’s two most popular APIs happen to be balance inquiry, followed by its ATM locator service. You would be hard pressed to think of a pair of services with more radically different security demands; this underscores the need for highly configurable API security and governance before these services go into production. He added that security must be a built-in attribute, one that must evolve with a constantly changing threat landscape or be left behind. This thought was echoed by Scott Watters, CIO of Zurich Financial Services, who added that we need to put more thought into moving security into applications. On all of these points I would agree, with the addition that security should be close to apps and loosely coupled in a configurable policy layer so that over time, you can easily address evolving risks and ever-changing business requirements.
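To make the idea of a loosely coupled, configurable policy layer a little more concrete, here is a minimal sketch in Python. Everything in it is hypothetical and for illustration only: the API names, the `Policy` fields, and the `is_allowed` function are my own assumptions, not anything Westpac or the other speakers described. The point is simply that the rules live in a table outside the services, so a balance inquiry and an ATM locator can carry very different requirements without either one hard-coding them.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    """Security requirements for one API (hypothetical fields)."""
    require_auth: bool
    require_tls: bool


@dataclass(frozen=True)
class Request:
    """The few facts about an incoming call that the policy layer inspects."""
    api: str
    authenticated: bool
    over_tls: bool


# Hypothetical policy table. In practice this might be loaded from
# configuration so it can change without redeploying the services.
POLICIES = {
    "balance_inquiry": Policy(require_auth=True, require_tls=True),
    "atm_locator": Policy(require_auth=False, require_tls=False),
}


def is_allowed(request: Request, policies: dict = POLICIES) -> bool:
    """Check a request against the policy for its API; deny unknown APIs."""
    policy = policies.get(request.api)
    if policy is None:
        return False  # no policy defined: deny by default
    if policy.require_auth and not request.authenticated:
        return False
    if policy.require_tls and not request.over_tls:
        return False
    return True
```

Under these assumptions, an anonymous call to the ATM locator passes while the same call to balance inquiry is refused, and tightening either rule means editing the table rather than the service behind it.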

The entire day was probably best summed up by Fennel, who observed that “you can’t outsource responsibility and accountability.” Truer words have not been said in any conference, north or south.